Converting my book to a chatbot

Exploring ChatGPT and OpenAI in general

Note: this text is, of course, obsolete, like anything you can find on AIs that’s more than 6 months old.

One interesting thing I’ve noticed is that the GPT AI algorithm can be trained on book data and act as a chatbot for that book. Literally, you can ask a book some questions and it should answer you. It just so happens that I’ve just finished my book on the European startup scene (it should be published in a couple of months), so I have just the right thing to experiment with.

When I’m not working at my AR startup, I’m usually a senior systems architect, and I’ve only watched from afar what’s going on in the AI space, though I do have a PhD in computer engineering and have done a lot of research projects, so I’m familiar with the terminology and some of the algorithms in this field.

Here’s what I’ve found.

APIs

OpenAI, the org (a curious combination of for-profit and not-for-profit companies), has historically published their algorithms and pre-trained models, right up to GPT-2. Anyone can freely use their GPT-2 models to do their own thing, but GPT-3, the most advanced one, is currently kept private, and can only be used through their (commercial) APIs. They say it’s too powerful to fall into the wrong hands, and from what I’ve seen, I kind of agree.

Their APIs offer 4 models of different quality (apparently all GPT-3), named A, B, C and D, or Ada, Babbage, Curie and DaVinci, in order from the simplest and cheapest to the most complex. It looks to me like DaVinci is the one used in ChatGPT; it’s the one closest to an actual human interaction.

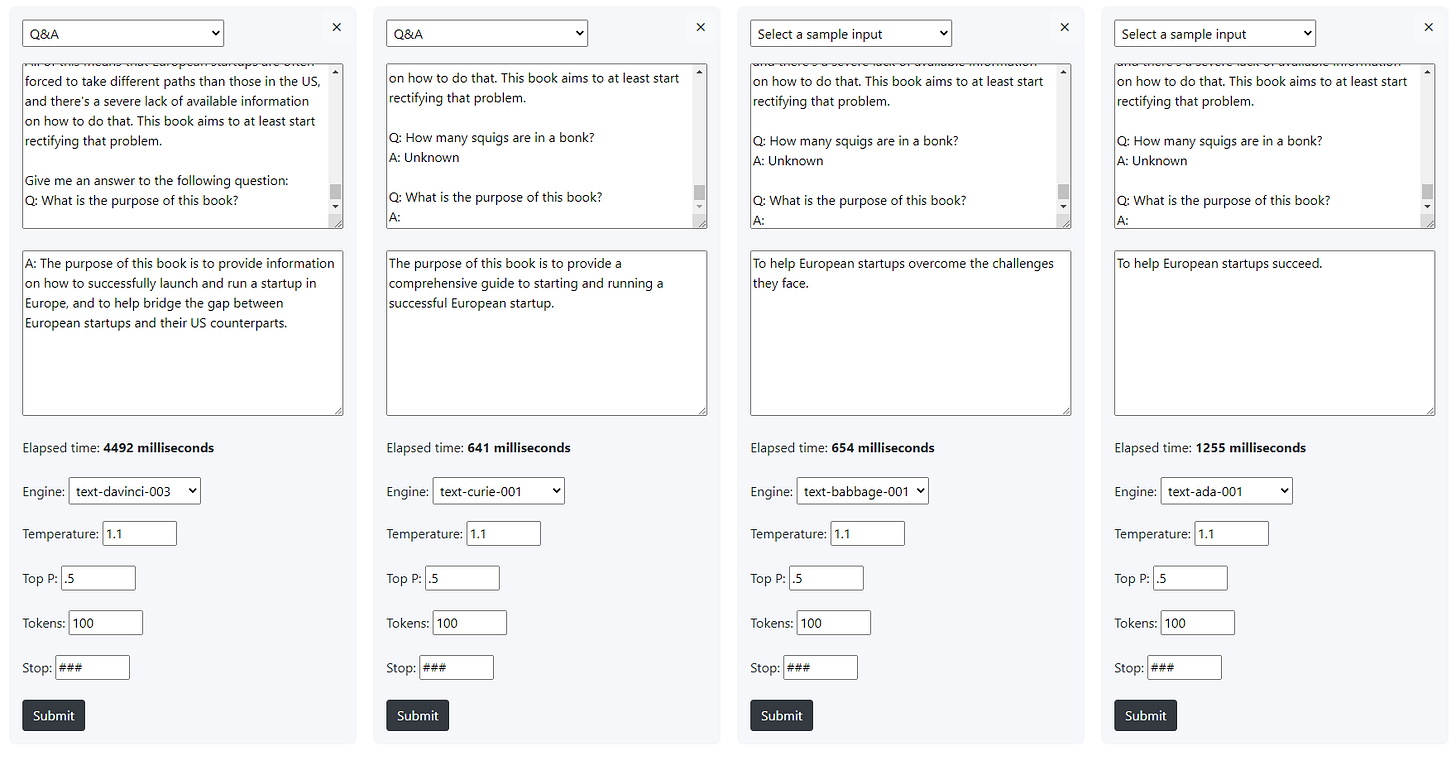

There’s a tool at https://gpttools.com/comparisontool which allows anyone to compare the outputs of different models, and it gives a striking example of what high-quality vs low-quality model output looks like. In the screenshot, look at the contents of the second big text areas:

You can easily see how the quality degrades with the models D→C→B→A.

DaVinci doesn’t even need to be given an example of what to do, in terms of Q&A, while the other models do need one.
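To make the difference concrete, here is a minimal sketch of the two prompt styles: one with no example at all, and one that shows a worked Q&A pair first. The `build_prompt` helper, the "Q:/A:" layout and the sample questions are my own assumptions for illustration, not anything prescribed by OpenAI.

```python
def build_prompt(question, examples=()):
    """Build a plain-text Q&A prompt.

    `examples` is a sequence of (question, answer) pairs shown to the model
    before the real question. An empty sequence gives a prompt with no examples.
    """
    lines = []
    for q, a in examples:
        lines.append(f"Q: {q}")
        lines.append(f"A: {a}")
        lines.append("")  # blank line between an example and what follows
    lines.append(f"Q: {question}")
    lines.append("A:")  # left open for the model to complete
    return "\n".join(lines)


# No example: just the question and an open answer slot.
zero_shot = build_prompt("Which city hosts the most startups in Europe?")

# One worked example first, the style the smaller models seem to need.
few_shot = build_prompt(
    "Which city hosts the most startups in Europe?",
    examples=[("What is the capital of France?", "Paris")],
)

print(few_shot)
```

The resulting string is what you would send as the prompt text, whichever model you pick.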

What surprised me is that the A, B and C models often simply respond with “Unknown” for the exact same query parameters. I need to ask them repeatedly until I get any kind of response.
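Asking repeatedly is easy to automate. The sketch below assumes a hypothetical `ask` callable that wraps whatever API call you use and returns the model's text; `fake_ask` is only a stand-in so the example runs without network access. Retrying only helps when the sampling is non-deterministic, that is, with a temperature above 0.

```python
def ask_until_answered(ask, prompt, max_attempts=5):
    """Call `ask(prompt)` until it returns something other than "Unknown".

    `ask` is a hypothetical callable wrapping the actual model call.
    Returns (answer, attempts_used); answer is None if every attempt
    came back empty or "Unknown".
    """
    for attempt in range(1, max_attempts + 1):
        answer = ask(prompt).strip()
        if answer and answer.lower() != "unknown":
            return answer, attempt
    return None, max_attempts


# Stand-in for a real model call: says "Unknown" twice, then answers.
canned = iter(["Unknown", " Unknown ", "Berlin"])

def fake_ask(prompt):
    return next(canned)


result = ask_until_answered(fake_ask, "Q: (some question)\nA:")
print(result)
```

Capping the number of attempts matters here, since every retry is a billed API call.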

I find the most interesting parameter here is the “temperature”, which governs how inventive the generated output should be. For DaVinci, as everyone has probably seen by now, this can lead to outright fabrication, affectionately called “AI hallucinations.” For this test, I set the temperature very high (the usual recommendation is to stay around 0.75 for a good combination of factualness and text flow) to see how the non-DaVinci models behave. With a high temperature, DaVinci started giving out grammatically perfect nonsense, while the others gave less grammatically correct nonsense.
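For intuition on what temperature does, here is the textbook formulation: the model's raw scores for each candidate word are divided by the temperature before being turned into probabilities. This is a sketch of the general technique, not OpenAI's actual code (which isn't public), and the three scores are made up.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next words, best first.
logits = [2.0, 1.0, 0.0]

low = softmax_with_temperature(logits, 0.2)   # sharp: top word dominates
high = softmax_with_temperature(logits, 2.0)  # flat: unlikely words get a real chance

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

With a low temperature nearly all the probability lands on the top-scoring word; with a high one the distribution flattens, so unlikely words get picked more often, which fits the nonsense I was seeing.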

I’m not sure about the top_p parameter. As described, it looks like it should limit the selection to the most likely words. I need to test it out more.
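As far as I understand the general technique (usually called nucleus sampling), top_p keeps only the smallest set of most likely words whose probabilities add up to at least p, and samples from those. The sketch below shows that idea on a made-up distribution; the `top_p_filter` helper and the word probabilities are assumptions for illustration, and I haven't verified that the API behaves exactly this way.

```python
def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose cumulative
    probability reaches `top_p`, then renormalise so they sum to 1."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, p in ranked:
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


# Made-up probabilities for the next word.
probs = {"startup": 0.5, "company": 0.3, "venture": 0.15, "banana": 0.05}

nucleus = top_p_filter(probs, 0.75)
print(nucleus)
```

Here "venture" and "banana" are dropped before sampling, so a lower top_p trims the unlikely tail regardless of the temperature setting.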

(I’m usually available for hire on these types of projects).