I’ve dealt with a number of MiniPCs so far this year (see my Minisforum AI370 review for an intro to MiniPCs), and I’ll review just one more: the GMKtec NucBox G9 NAS.

The sole reason this machine exists is to host 4 M.2 SSDs, either as 4x NVMe (PCIe) drives or as 1x SATA drive. The CPU here is the Intel N150, the latest and greatest of Intel’s Celeron replacements.

I’m an industry veteran: I was doing serious work with servers back when we had them in actual on-premises racks, before virtualisation was a thing. This 4-core CPU, considered low-end today, absolutely dominates even some of the biggest servers we had around 10 years ago.

Another interesting thing about this device is that it has 64 GB of on-board eMMC (“flash drive”) storage for the OS, completely independent from the M.2 storage slots. This means that all of the M.2 drives can be used in some kind of RAID, while the OS sits mostly read-only on the internal eMMC.

Since this is an SSD storage device, it doesn’t really need a lot of RAM for caching, especially with the network ports being only 2.5 Gbit/s. Maximum network bandwidth for this configuration will be around 300 MB/s, or around 600 MB/s if you get fancy with load balancing across both ports. The 12 GB of RAM installed on the device is adequate.
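The bandwidth figures above come from simple unit conversion: divide the link speed in bits per second by 8. Here is a minimal sketch of that arithmetic as a shell function (the name `link_mbytes_per_sec` is hypothetical, not a standard tool). It gives the theoretical ceiling; protocol overhead (Ethernet, TCP/IP, SMB) pushes real transfers somewhat lower, which is why ~300 MB/s is the realistic figure.

```shell
# Hypothetical helper: theoretical payload ceiling in MB/s (10^6 bytes)
# for a link speed given in Mbit/s. Ignores all protocol overhead.
link_mbytes_per_sec() {
  link_mbit=$1        # link speed in Mbit/s, e.g. 2500 for 2.5GbE
  links=${2:-1}       # number of links, e.g. 2 when load balancing
  echo $(( $link_mbit * $links / 8 ))
}

link_mbytes_per_sec 2500      # one 2.5GbE port  → 312
link_mbytes_per_sec 2500 2    # both ports       → 625
```

With most Linux bonding modes, a single TCP connection still travels over one link, so the doubled figure is mainly reachable with several clients at once.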

Why?

A 4-bay M.2 SSD server isn’t really a common configuration, especially in the MiniPC form factor. At this time, it’s also not the most economical option, since HDDs still cost about half as much as SSDs at comparable (large) capacities.

Still, there’s some elegance in avoiding moving parts and large form factors. Having an SSD NAS is actually quite nice.

Ever since the Raspberry Pi B+ came out, I’ve had one running as a home mini-server. Nothing serious, just a convenient little machine to SSH into and do some quick and dirty stuff on, or to leave working on something overnight. I’ve upgraded that RPI over the years, and it has had various roles: a web server for my blog (and not only for the blog - I once ran a search service for my tiny country’s official gazette, just to prove the dataset was small enough to fit on an RPI), a torrent client, a Git server, and all sorts of other things.

For a couple of years now, I’ve been thinking of rebuilding it into a proper NAS with at least 2 SSDs in RAID. I came very close to buying the components, but getting all the extenders, enclosures and power supplies seemed like too much hassle, and the cost kept creeping up.

When I found this MiniPC, a lightbulb just lit up and I forgot all the plans for the RPI-based NAS.

How I’m running it

Not much to say here - I’m running Ubuntu from the eMMC drive, and I’ve put 4x M.2 drives into a ZFS RAID-Z array.
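The post doesn’t include the exact commands, so here is a minimal sketch of creating such a pool on Ubuntu. It assumes the zfsutils-linux package, a pool named `tank`, and placeholder `/dev/disk/by-id` names (all hypothetical; substitute your own). With four 2 TB drives, a single-parity RAID-Z vdev gives roughly 6 TB of usable space before ZFS overhead.

```shell
# Install the ZFS userland tools (Ubuntu).
sudo apt install zfsutils-linux

# Identify the drives: the eMMC usually appears as mmcblk0,
# the M.2 SSDs as nvme0n1 .. nvme3n1.
lsblk -o NAME,SIZE,MODEL,MOUNTPOINT
ls -l /dev/disk/by-id/ | grep nvme

# Create a single-parity RAID-Z pool from the four SSDs.
# PLACEHOLDERS: "tank" and the nvme-DRIVE* names are hypothetical.
# by-id paths stay stable even if the kernel renumbers the nvme devices.
# ashift=12 means 4 KiB sectors, a common choice for SSDs.
sudo zpool create -o ashift=12 tank raidz \
  /dev/disk/by-id/nvme-DRIVE1 \
  /dev/disk/by-id/nvme-DRIVE2 \
  /dev/disk/by-id/nvme-DRIVE3 \
  /dev/disk/by-id/nvme-DRIVE4

sudo zpool status tank
```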

Since the CPU is actually capable enough, I’m also using the machine as a VM server for experiments I don’t want to run on my main system, with the Cockpit web UI for VM management (highly recommended!).
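For reference, here is a minimal sketch of installing Cockpit with its VM plugin on Ubuntu. cockpit-machines manages VMs through libvirt; depending on the Ubuntu release, the libvirt and QEMU packages may need to be installed separately. The package list below is an assumption, not the author’s exact setup.

```shell
# Cockpit web console plus its virtual machine page.
sudo apt install cockpit cockpit-machines
# If libvirt/QEMU were not pulled in as dependencies (release-dependent):
# sudo apt install libvirt-daemon-system qemu-system-x86

# Start Cockpit now and on every boot (it is socket-activated).
sudo systemctl enable --now cockpit.socket

# The web UI listens on port 9090 by default:
#   https://<nas-address>:9090
```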

I’ve configured ZFS to compress the file systems where the raw VM images reside, but not to cache their data in the ARC, and it works beautifully. I can snapshot the VMs whenever I want to. This is the first urban legend I want to address: ZFS doesn’t need infinite RAM for its ARC to work. In this case, I’ve capped the ARC at 1.5 GB, and with only metadata caching enabled on the VM storage and ISO image storage file systems (primarycache=metadata), even that much isn’t used. Back when ZFS was new (and on FreeBSD), I used to run it on servers which had 2 GB of RAM total. SSDs are fast and have tiny latencies, so clever caching algorithms aren’t as important for them as they were for HDDs.
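The ARC cap and per-dataset caching described above map onto a ZFS module parameter and dataset properties. Here is a minimal sketch, assuming the pool is called `tank` and the datasets are `tank/vms` and `tank/iso` (hypothetical names). The post doesn’t say which compression algorithm is used, so lz4 is an assumption. 1.5 GB is expressed in bytes as 1.5 * 1024^3 = 1610612736.

```shell
# Cap the ARC at 1.5 GiB (1.5 * 1024^3 = 1610612736 bytes).
# Persistent: read when the zfs kernel module loads.
# NOTE: tee overwrites any existing /etc/modprobe.d/zfs.conf.
echo "options zfs zfs_arc_max=1610612736" | sudo tee /etc/modprobe.d/zfs.conf
# Immediate: adjust the running module without a reboot.
echo 1610612736 | sudo tee /sys/module/zfs/parameters/zfs_arc_max

# HYPOTHETICAL dataset names. Compress the VM images, and cache only
# metadata (not file data) in the ARC for VM and ISO storage.
sudo zfs create -o compression=lz4 -o primarycache=metadata tank/vms
sudo zfs create -o primarycache=metadata tank/iso
# For datasets that already exist, use "zfs set" instead, e.g.:
#   sudo zfs set primarycache=metadata tank/vms

# Check the effective values.
zfs get compression,compressratio,primarycache tank/vms tank/iso

# Snapshot before a risky experiment. Rolling back discards everything
# written since the snapshot, so shut the VM down first.
sudo zfs snapshot tank/vms@before-experiment
# sudo zfs rollback tank/vms@before-experiment
```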

The cooling fiasco

This particular model, the NucBox G9, has a bad reputation for overheating. The CPU has a thermal shutdown limit of around 105 degrees C, and after I configured the OS and started running actual workloads on it, it reached that temperature easily. Luckily, it wouldn’t just shut down; it would reboot.

And the reputation is somewhat deserved: the default configuration of heatsinks, BIOS and Linux settings cannot properly cool this CPU and the SSDs. But the device has 2 fans, so it should be able to cool itself up to a point. While we can’t do much for the SSDs except choose ones that run cool rather than fast (the network interfaces aren’t that fast anyway; I’m using GIGABYTE Gen3 2500E SSDs, which are PCIe 3.0, DRAM-less drives that use HMB (Host Memory Buffer) instead of an on-board memory cache), we can fine-tune the BIOS and Linux power management.

BIOS

For some reason, the default BIOS settings are way too conservative about turning on the fans. Since most of the workarounds for this model involve strapping big fans to its case or completely nerfing its performance, I’ll present the details of how I configured it and what the effects of this configuration are.

First, the boot performance mode should be set to max non-turbo performance, and C-states should be enabled (I’m using the “balanced” power profile in the BIOS).

Next, in the Advanced settings, open the “Smart Fan Function” menu:

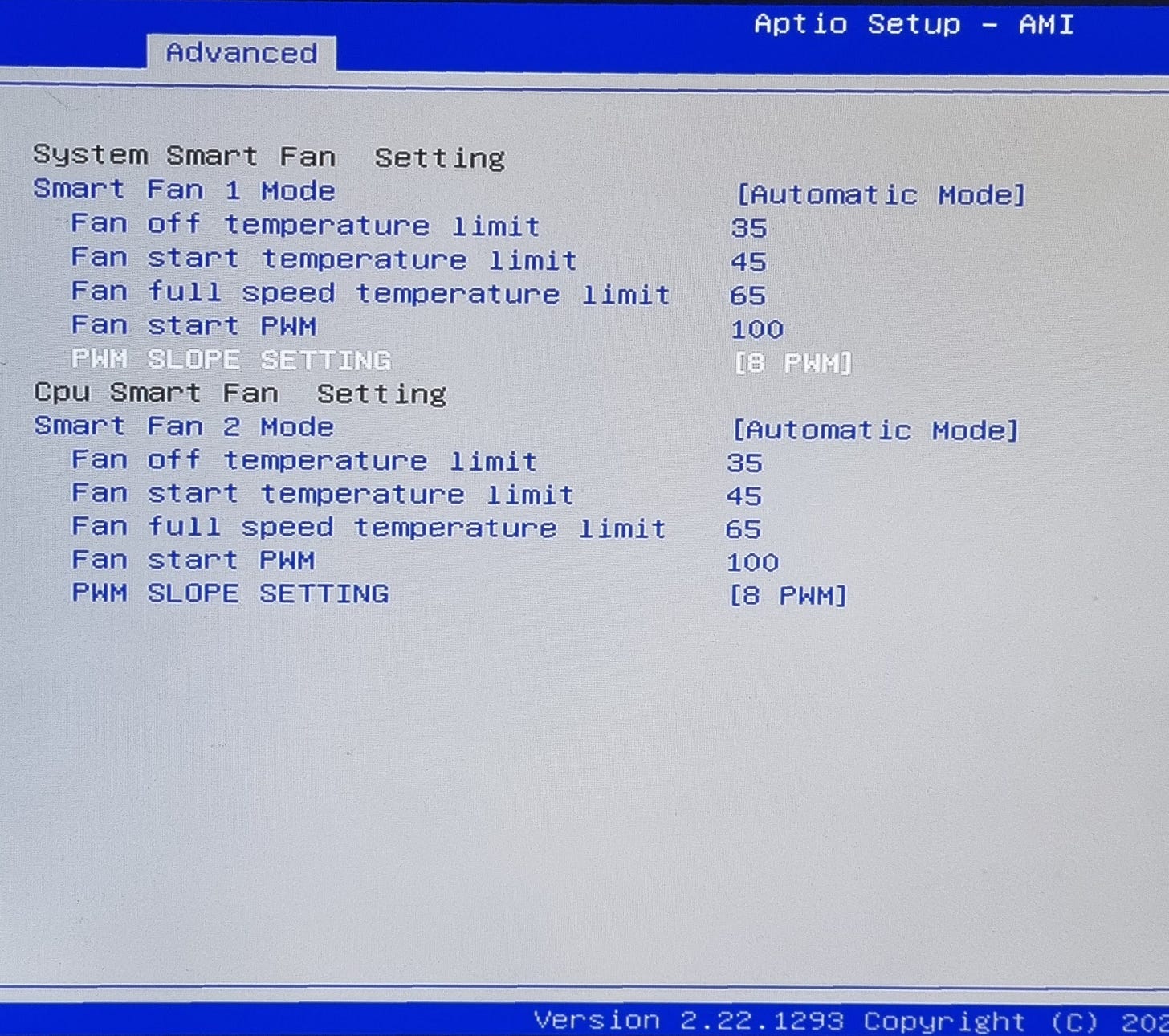

Here are the interesting settings, the same for both fans:

* Fan mode: Automatic
* Fan start temperature limit: 45 degrees C
* Fan full speed temperature: 65 degrees C
* Fan start PWM: 100 (see Wikipedia for what pulse-width modulation (PWM) is; here, it drives the fans, and the value ranges from 0 to 255. So 100 is about a 39% duty cycle, a bit under half speed.)
* PWM slope setting: 8 PWM (how many PWM steps to move each time the temperature monitor decides to increase or decrease fan speed. So 8 means that the first increase takes the fan PWM from 100 to 108. Basically, it controls how aggressively the fans ramp up.)

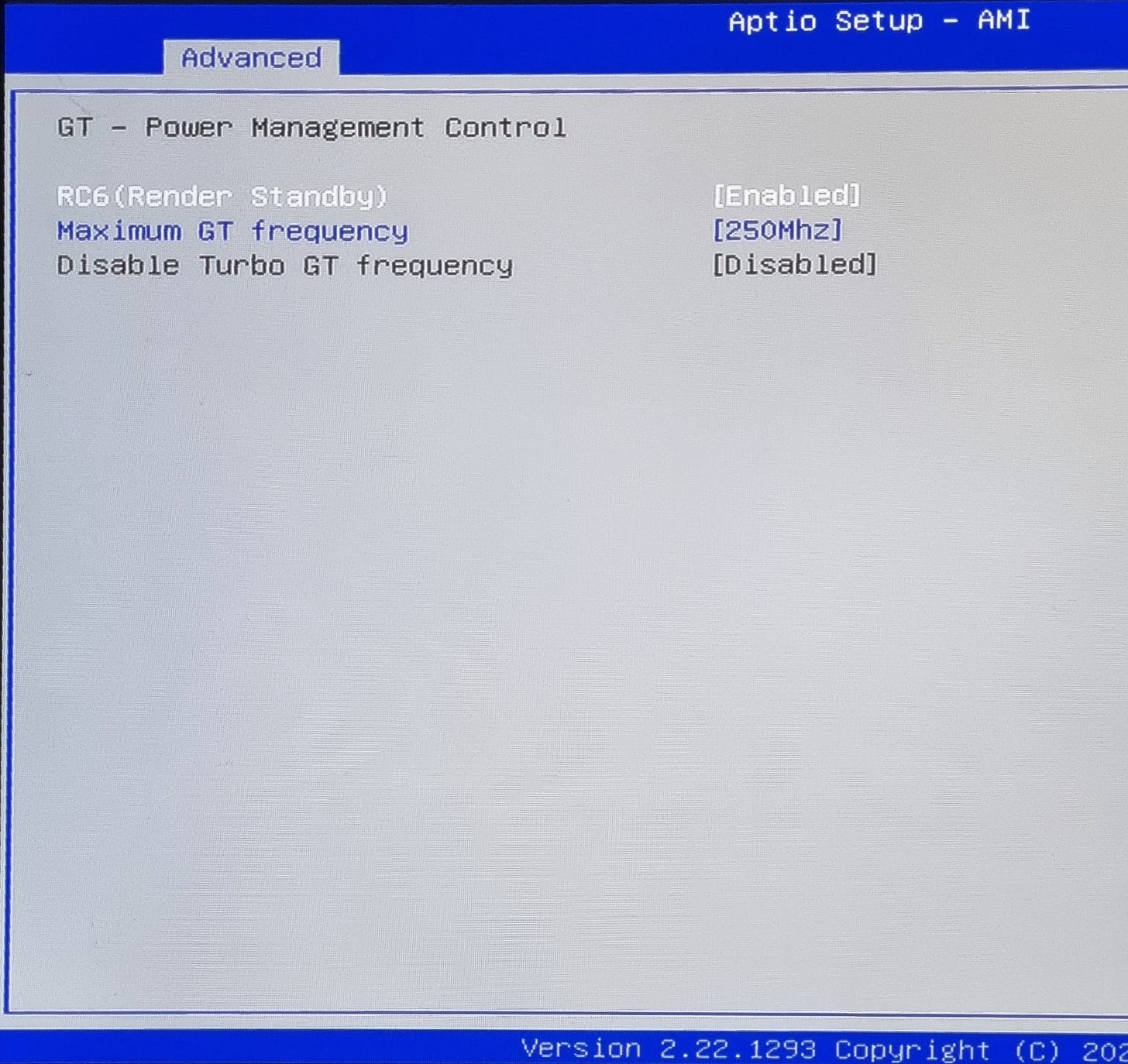
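To put the fan numbers in perspective, here is a small sketch of the arithmetic using hypothetical helper functions. It assumes the BIOS treats PWM as a linear 0-255 duty cycle and clamps the last ramp step at 255. Actual fan RPM is usually not linear in duty cycle, so treat the percentages as approximate.

```shell
# Hypothetical helpers for the BIOS fan values (PWM range 0-255).

# Duty cycle in percent for a PWM value (integer, rounded down).
pwm_percent() {
  echo $(( $1 * 100 / 255 ))
}

# Number of slope steps needed to ramp from a start PWM to full speed (255).
# Rounds up, assuming the final step overshoots and is clamped at 255.
pwm_steps_to_full() {
  start=$1
  slope=$2
  echo $(( (255 - $start + $slope - 1) / $slope ))
}

pwm_percent 100             # → 39
pwm_steps_to_full 100 8     # → 20
```

So with a slope of 8, going from the start value to full speed takes about 20 adjustments. A larger slope ramps the fans up in fewer, bigger steps.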

Next, since I’m using this as a headless NAS, I’ve nerfed the GPU frequency to 250 MHz. I probably could have gone even lower.

Remember, without this tuning, the CPU reached its thermal shutdown limit of ~105 degrees C under heavy load. Now, the CPU stress test produces temperatures below 85 degrees C. Where it previously idled at around 70 degrees C, it now idles at around 55 degrees C. The SSDs previously heated up to over 70 degrees C, and now they stay in the 40-60 degrees C range.

I would have been happier with even lower temperatures, but those numbers are not terrible.
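Readings like these can be taken from the kernel’s thermal zones in sysfs, which report temperatures in millidegrees Celsius. Here is a minimal sketch (`print_thermal_zones` is a hypothetical helper). Which zones exist, such as x86_pkg_temp or acpitz, depends on the platform, and SSD temperatures are better read with a tool like nvme-cli’s `nvme smart-log`.

```shell
# Print the type and temperature (whole degrees C) of every thermal zone.
# The optional argument overrides the sysfs base directory.
print_thermal_zones() {
  base=${1:-/sys/class/thermal}
  for zone in "$base"/thermal_zone*; do
    # Skip the unexpanded glob (no zones) and unreadable entries.
    [ -r "$zone/temp" ] || continue
    zone_type=$(cat "$zone/type" 2>/dev/null || echo unknown)
    millideg=$(cat "$zone/temp" 2>/dev/null) || continue
    [ -n "$millideg" ] || continue
    echo "$zone_type: $(( millideg / 1000 )) C"
  done
}
```

For example, `while sleep 2; do print_thermal_zones; echo; done` prints a fresh reading every two seconds while a stress test runs.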

Linux

The BIOS configuration described above leads to a MUCH more stable system than out of the box, but the system still reboots due to overheating on rare occasions.

To fix this, we can instruct Linux to cap the CPU’s maximum performance state (P-state). As root:

```shell
echo 75 > /sys/devices/system/cpu/intel_pstate/max_perf_pct
```

To make this persist across reboots, create a systemd service file named /etc/systemd/system/max_pstate.service with these lines:

```ini
[Unit]
Description=Reduce max pstate
After=network.target network-online.target

[Service]
ExecStart=/bin/sh -c 'echo 75 > /sys/devices/system/cpu/intel_pstate/max_perf_pct'
Type=oneshot

[Install]
WantedBy=multi-user.target
```

(Note that the ExecStart line must stay on a single line.)

To run this service now, do:

```shell
service max_pstate start
```

And to enable it to run on every boot:

```shell
systemctl enable max_pstate
```

This effectively limits the CPU frequency to about 2.7 GHz (75% of the N150’s 3.6 GHz maximum).

The N150 CPU is capable of running up to 3.6 GHz, so this looks like a downgrade, but in practice, the thermals of the device are so bad I’ve never actually seen it rise over 3 GHz for more than 1 second in the stock configuration. Besides that, the top frequency is only achievable with a single core. Because of this, CPU benchmark results after applying this setting are nearly the same as before applying it.
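If you want to experiment with a different cap, the value to write follows from the ratio of the target frequency to the maximum. Here is a minimal sketch with a hypothetical helper. It assumes intel_pstate’s documented meaning of max_perf_pct (a percentage of the maximum, turbo, performance level) and takes the N150’s 3.6 GHz as that maximum.

```shell
# Hypothetical helper: max_perf_pct value for a target frequency cap.
# Arguments are in MHz; the maximum defaults to the N150's 3600 MHz.
perf_pct_for() {
  target_mhz=$1
  max_mhz=${2:-3600}
  echo $(( $target_mhz * 100 / $max_mhz ))
}

perf_pct_for 2700   # → 75
perf_pct_for 3000   # → 83
```

Whatever value you pick goes into both the echo command and the ExecStart line of the service. `cat /sys/devices/system/cpu/intel_pstate/max_perf_pct` shows the value currently in effect.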

The device reaches the thermal cutoff point because of essentially random fluctuations: even when the system load is constant, the CPU frequency occasionally spikes so much that the temperature overshoots almost instantly. This setting makes sure the frequency never exceeds what’s realistically the maximum for the device’s thermal design.
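To watch for these spikes, or to check that the cap holds, you can sample the per-core frequencies the kernel reports. Here is a minimal sketch with a hypothetical helper that prints the highest `cpu MHz` value from /proc/cpuinfo. The values there are approximate snapshots, so short spikes between samples can still be missed.

```shell
# Print the highest "cpu MHz" value across all cores, in whole MHz.
# The optional argument lets you point it at a saved copy of /proc/cpuinfo.
max_cpu_mhz() {
  awk -F: '/^cpu MHz/ { v = $2 + 0; if (v > max) max = v }
           END { printf "%d\n", max }' "${1:-/proc/cpuinfo}"
}
```

Run it in a loop during a stress test, e.g. `while sleep 1; do max_cpu_mhz; done`. With the 75% cap in place, readings should stay at or below roughly 2700 MHz.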

Verdict

I’ve been running this device for just 1 month now, and I only applied the power and cooling tuning described above recently. So far, it’s been stable, but the fact remains that the device doesn’t have an adequate thermal design. If the manufacturer resolves this issue in a future hardware revision, it would be a really neat device and one I’d recommend.

Stability tests

Here’s a summary of what I tested on the device so far:

* Copied a 20 GB file back and forth over Samba to a compressed ZFS file system, over a 1 Gbps network
* Ran the OpenSSL speed benchmark (multithreaded) for 8 hours (recorded power consumption: 25W)
* Did both of the above at the same time, for 4 hours

I think that’s enough to declare the problem fixed.
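For anyone wanting to repeat the long CPU run, here is a minimal sketch of a time-limited OpenSSL load loop. The post doesn’t say which algorithms were benchmarked, so rsa2048 is an assumption. `openssl speed -multi N` runs N worker processes in parallel, and GNU timeout ends the loop after the given duration.

```shell
# Hypothetical helper: keep all cores busy with OpenSSL for a given duration.
# GNU timeout signals its whole process group, so the openssl workers stop too.
# Returns timeout's exit status (124 when the duration elapsed).
openssl_burn() {
  duration=$1   # GNU timeout syntax, e.g. 8h, 30m, 10s
  timeout "$duration" sh -c \
    'while :; do openssl speed -multi "$(nproc)" rsa2048 >/dev/null 2>&1; done'
}

# Example: an 8-hour run like the one in the list above.
# openssl_burn 8h
```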

Performance

For this particular setup, with 4x GIGABYTE Gen3 2500E 2TB SSDs in RAID-Z, I get single drive sequential read performance of 1.6 GB/s, and a “zpool scrub” rate of about the same 1.6 GB/s bandwidth (i.e. around 400 MB/s per drive). I’m not sure that’s a coincidence. Again, this is far more than the network interfaces can handle, so it’s acceptable.
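The post doesn’t describe how the numbers above were measured. Here is a minimal sketch of one way to reproduce them, assuming fio is installed and /tank/bench is a hypothetical scratch dataset. To measure the drives rather than RAM, either make the test file larger than the ARC or set primarycache=metadata on the scratch dataset, as described earlier.

```shell
# HYPOTHETICAL path. fio first lays out the 8 GiB file (a write pass), then
# reads it sequentially in 1 MiB blocks and reports the bandwidth.
fio --name=seqread --filename=/tank/bench/fio.test --rw=read --bs=1M --size=8G

# Start a scrub; while it runs, "zpool status" shows the scan rate.
sudo zpool scrub tank
zpool status tank
```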

Comments

Thanks for this article! A few weeks ago I started thinking about building a small all-SSD NAS, and found this device on AliExpress. After checking the reviews, I found that some were quite positive, but there have been a lot of complaints about really bad thermals from reviewers and end users alike. But there really aren't any alternatives in this price class and size that don't have the same overheating issue, and I really, really wanted to avoid spinning drives and fit into a rather small (but open to air) space.

But thanks to your post, I now believe the thermals can be reasonably managed, so I finally pulled the trigger on the G9.

I don't usually comment on posts like this, but I'm very happy you shared your results from testing the GMKtec G9. I was struggling to make the system stable, and your last change, setting the CPU performance to 75%, was the key for me.

I tried a few things with less success (my setup is TrueNAS Scale with 4x 4TB NVMe drives):

* Forced ZFS to leave 2 GB of RAM free instead of using it all for cache
* Set all power options in the BIOS to energy saving
* Forced the NVMe drives' PCIe links to Gen 1 instead of Gen 3 (1.8 GB/s down to 400 MB/s)
* Removed one of the 2 LAN cables (as I am running a bonded Ethernet interface)
* Pointed a USB fan directly at the NVMe drives, with the plastic lid removed, to get better temps

I was able to bring the temps down to a more stable 45-50ºC range, but the system was still rebooting. This makes me think the issue is more related to a weird power management problem with the motherboard itself (not just mine, but most of them, as far as I can see) than to heat. I didn't know I could cap the CPU power in Linux using that command, and that made the system run stable through a very intensive read-write stress test for hours. Thank you very much!